This is mark Joseph “young” blog entry #195, on the subject of Probabilities in Dishwashing.

I was going to call this “What Are the Odds?”, but that title is too useful to spend on this. In fact, almost every time my bill rings up to an exact dollar amount, ending in “.00”, I ask the cashier that question; usually they have no idea, so I tell them. But I’m a game master–I’ve been running Multiverser™ for over twenty years, and Dungeons & Dragons™ for nearly as long before that. I have to know these things. After all, whenever a player asks me, “What do I have to roll?”, he really means “What are the odds that this will work?” Then, usually very quickly and by the seat of my pants, I have to estimate the chance that something will happen the way the player wants it. So I find myself wondering about the odds frequently, and an appendix in the back of the Multiverser rule book provides a number of tools to help figure out the odds in a lot of situations.

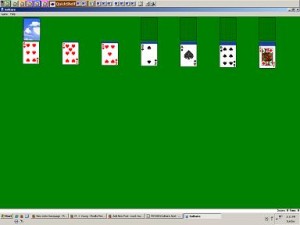

And so when I saw an improbable circumstance, I immediately wondered what the odds were, and then I wondered how I would calculate them, and then I had the answer. It has something in common with the way I cracked the probabilities of dice pools decades back (that’s in the book), but has more to do with card probabilities, as we examined in web log post #1: Probabilities and Solitaire, than with dice.

So here’s the puzzle.

At some point I bought a set of four drinking cups in four distinct colors. I think technically the colors were orange, green, cyan, and magenta, although we call the cyan one blue and the magenta one red, and for our purposes all that matters is that there are four colors, A, B, C, and D. We liked them enough, and they were cheap enough, that on my next trip to that store I bought another identical set. That means that there are two tumblers of each color.

I was washing dishes, and I realized that among those dishes were exactly four of these cups, one of each of the four colors. I immediately wondered what the odds were, and quickly worked out how to calculate them. I did not finish the calculation while I was washing dishes, for reasons that will become apparent, but I thought I’d share the process here to help other game masters estimate odds. This is a problem in the probability of non-occurrence: what are the odds of not drawing a pair?

The color of the first cup does not matter, because when you have none and you draw one, it is guaranteed not to match any previously drawn cup, because there aren’t any. Thus there is a one hundred percent chance that the first cup will be one that you need and not one that you don’t want. Whatever color it is, it is our color A.

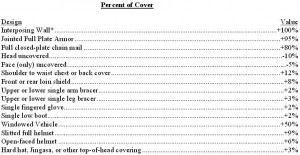

In drawing the second cup, what you know is that there are now seven cups that you do not have, one of which will be a match. That means there is one chance in seven of a match, six chances in seven of not matching. This is where I stopped the math, because I hate sevenths. I know that they produce a six-digit repeating decimal whose digits always come in the same cyclic order, just starting at a different point: 1/7th is 0.1̅4̅2̅8̅5̅7̅, and 2/7ths is 0.2̅8̅5̅7̅1̅4̅. But I can never remember that sequence (I don’t use it often enough for it to matter, and I can look it up in the table in the back of the Multiverser book, as I just did here, or plug it into a calculator). So the probability of the second cup matching the first, of drawing the other A, is 14.2̅8̅5̅7̅1̅4̅%, and the probability of not drawing a match is 85.7̅1̅4̅2̅8̅5̅%.
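If you would rather not memorize the cycle, ordinary long division finds it: keep dividing, and the moment a remainder repeats, the digits start repeating too. The sketch below is my own illustration (the function name `repeating_decimal` is hypothetical, not from the Multiverser book), returning the whole-number part, any non-repeating digits, and the repeating block.

```python
def repeating_decimal(numerator: int, denominator: int) -> tuple[str, str, str]:
    """Return (integer part, non-repeating digits, repeating digits) for
    numerator/denominator using long division. A remainder that shows up
    a second time marks where the repeating cycle begins."""
    integer_part, remainder = divmod(numerator, denominator)
    digits: list[str] = []
    seen: dict[int, int] = {}  # remainder -> index of the digit it produced
    while remainder and remainder not in seen:
        seen[remainder] = len(digits)
        digit, remainder = divmod(remainder * 10, denominator)
        digits.append(str(digit))
    if not remainder:  # the division came out even: a terminating decimal
        return str(integer_part), "".join(digits), ""
    start = seen[remainder]
    return str(integer_part), "".join(digits[:start]), "".join(digits[start:])


# Every k/7 uses the same six digits, rotated.
for k in range(1, 7):
    whole, fixed, cycle = repeating_decimal(k, 7)
    print(f"{k}/7 = {whole}.{fixed}({cycle})")
```

The same function gives the percentages directly: `repeating_decimal(100, 7)` yields 14.(285714) and `repeating_decimal(600, 7)` yields 85.(714285), matching the figures above.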

So with a roughly 86% chance we have two cups that do not match, colors A and B, and we are drawing the third from a pool of six cups, of which there are one A, one B, two Cs and two Ds. That means there are two chances that our draw will match one of the two cups we already have, against four chances that we will get a new color. There is thus a 33.3̅3̅% chance of a match, a 66.6̅6̅% chance that we will not get a match.

We thus have a roughly 67% chance of drawing a third color (call it C; either of the two remaining colors will do), but that assumes that we have already drawn colors A and B. We had a 100% chance of drawing color A, and an 86% chance of drawing color B. That means our current probability of having three differently colored cups is 67% of 86% of 100%, a simple multiplication problem which yields about 57% (exactly 4/7, since 6/7 × 4/6 = 4/7). Odds slightly favor getting three different colors.
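Multiplying rounded percentages lets a little error creep in, so it can help to run the same step-by-step reasoning with exact fractions. This is a minimal sketch assuming the setup in the text (a number of colors, the same number of identical cups per color, drawn without replacement); the function name `chance_all_distinct` is my own. Each step multiplies in the chance that the next cup avoids every color already drawn.

```python
from fractions import Fraction


def chance_all_distinct(colors: int, copies: int, draws: int) -> Fraction:
    """Probability that `draws` cups taken without replacement from
    `colors` colors, `copies` cups each, all come out different colors.
    Built one draw at a time, as in the text."""
    total = colors * copies
    probability = Fraction(1)
    for already_drawn in range(draws):
        remaining = total - already_drawn
        # The leftover twins of colors we already hold would be a match.
        matching = already_drawn * (copies - 1)
        probability *= Fraction(remaining - matching, remaining)
    return probability


for n in range(1, 5):
    p = chance_all_distinct(colors=4, copies=2, draws=n)
    print(f"{n} cups: {p} ({float(p):.1%})")
```

With four colors and two cups of each, this gives 1, 6/7, 4/7, and 8/35 for one through four cups. Using fractions rather than floats keeps the result exact, so 4/7 (about 57.1%) comes out directly instead of being rebuilt from rounded factors.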

As we go for the fourth, though, our chances drop significantly. There are now three colors to match, and five cups left in the pool, three of which match: three chances in five, or 60%, to match, which means two in five, or 40%, to get the fourth color. That’s 40% of 67% of 86% of 100%, and that comes to roughly a 23% chance (exactly 8/35, about 22.9%). That’s closer to 3/13ths (according to my chart), but close enough to one chance in four, 25%.
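One way to double-check the chain of multiplications is to skip it entirely and count. Treating the eight cups as distinct objects, there are 70 ways to pick four of them, and a hand with one of each color means choosing one of the two cups of each color, 2 × 2 × 2 × 2 = 16 ways. The sketch below (a brute-force check of my own, not the method from the text) lists every hand with Python’s `itertools.combinations` and counts.

```python
from fractions import Fraction
from itertools import combinations

# Eight cups, two of each color, labeled with the text's A-D.
CUPS = ["A", "A", "B", "B", "C", "C", "D", "D"]


def enumerate_one_of_each(cups: list[str], hand_size: int) -> tuple[int, int]:
    """Return (hands with no repeated color, total hands) over every way to
    pick `hand_size` cups. Cups are indexed by position, so the two A cups
    count as different objects."""
    hands = list(combinations(range(len(cups)), hand_size))
    distinct = sum(1 for hand in hands if len({cups[i] for i in hand}) == hand_size)
    return distinct, len(hands)


good, total = enumerate_one_of_each(CUPS, 4)
print(good, total, Fraction(good, total))  # 16 70 8/35
```

Counting agrees with the step-by-step product: 16/70 reduces to 8/35, and asking for three cups instead gives 32/56 = 4/7.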

A quicker way to do it in game, though, would be to assign each of the eight cups a number, and roll four eight-sided dice to see which four of the cups were drawn. You don’t have to know the probabilities to do it that way, but if you had any matching rolls you would have to re-roll them (one of any pair), because it would not be possible to select the same cup twice. In that sense, it would be easier to do it with eight cards, assigning each to a cup.
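Both table methods can be simulated to see that they land near the calculated 8/35. In this sketch the cup numbering (1 to 8, two per color) and the function names are hypothetical. The dice version rolls one d8 at a time and re-rolls any repeat, which produces the same spread of results as rolling four dice and re-rolling one die of each matching pair; the card version uses `random.Random.sample` to deal four of eight cards.

```python
import random
from collections.abc import Callable

# Hypothetical numbering: cups 1-8, two of each color A-D.
CUP_COLORS = {1: "A", 2: "A", 3: "B", 4: "B", 5: "C", 6: "C", 7: "D", 8: "D"}


def roll_four_cups(rng: random.Random) -> list[int]:
    """Dice method: roll a d8 per cup, re-rolling any result that repeats
    a cup already chosen, since the same cup can't be drawn twice."""
    chosen: list[int] = []
    while len(chosen) < 4:
        roll = rng.randint(1, 8)
        if roll not in chosen:
            chosen.append(roll)
    return chosen


def deal_four_cups(rng: random.Random) -> list[int]:
    """Card method: eight cards, one per cup; deal four without replacement."""
    return rng.sample(range(1, 9), 4)


def estimate(draw: Callable[[random.Random], list[int]],
             trials: int = 20_000, seed: int = 195) -> float:
    """Fraction of simulated draws that produce four different colors."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        colors = {CUP_COLORS[cup] for cup in draw(rng)}
        if len(colors) == 4:
            hits += 1
    return hits / trials


print(estimate(roll_four_cups), estimate(deal_four_cups))
```

Both estimates should hover around 0.229; the exact numbers depend on the seed and trial count. The card version never needs a re-roll, which is the advantage the text points out.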

I should note that this math does not address the more difficult questions: first, what are the odds that exactly four of the eight cups would be waiting to be washed, as opposed to three or five or some other number; second, how likely is it that someone has absconded with a cup of a particular color because he likes that color and is keeping it in his car or his room or elsewhere. The first question, however, is an assumption made in posing the problem, and the second presumably applies equally to any of the four colors (even if I can’t imagine anyone taking a liking to the orange one, someone in the house does like orange). Still, the exercise should give you a better understanding of how to figure out the odds of something happening.

For what it’s worth, the probability of a purchase totaling an exact dollar amount, assuming random prices and numbers of items purchased, is one chance in one hundred: “.00” is just one of the hundred equally likely ways the cents can come out. That, of course, assumes that the sales tax scheme in the jurisdiction doesn’t skew the odds.
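The one-in-a-hundred figure can be checked exactly under a simple model. This sketch assumes (my assumption, not a claim about real prices) that each item’s cents are independent and uniform from 0 to 99, with no tax. It tracks the distribution of the running total’s cents, adding one item at a time and wrapping around at 100. The function name `chance_exact_dollar` is hypothetical.

```python
from fractions import Fraction


def chance_exact_dollar(num_items: int) -> Fraction:
    """Exact chance that the total of `num_items` prices ends in .00,
    assuming each price's cents are independent and uniform over 0-99
    and that no tax is applied."""
    dist = [Fraction(0)] * 100
    dist[0] = Fraction(1)  # before any items, the total is $0.00
    for _ in range(num_items):
        new = [Fraction(0)] * 100
        for cents, p in enumerate(dist):
            if p:
                for item_cents in range(100):
                    new[(cents + item_cents) % 100] += p / 100
        dist = new
    return dist[0]


for items in (1, 2, 3):
    print(items, chance_exact_dollar(items))
```

Under this model the answer is 1/100 for any number of items, because a single uniformly random item already makes the total’s cents uniform. Tax, or prices that cluster at endings like .99, would break the uniformity assumption.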
